From:

FABIEN SANGLARD'S WEBSITE

DECEMBER 25TH, 2012

GAME TIMERS: ISSUES AND SOLUTIONS.

When I started game programming, I ran into an issue common to most aspiring game developers: The naive infinite loop produced a variable framerate and variable time differences, which made the game nondeterministic. Supreme Commander/Demigod developer Forrest Smith described this problem well in Synchronous Engine Architecture (mirror). Since I came up with a simple solution in my last engine, I wanted to share it here: Maybe it will save someone a few hours :) !

Problem: Variable framerate and variable timeslices.

When I started engine development, I used a naive game loop that would:

- Retrieve the time difference (timeslice) since the last screen update.

- Update the world according to inputs and timeslice.

- Render the state of the world to the screen.

It looked as follows:

```c
int lastTime = Timer_Gettime();
while (1) {
    int currentTime = Timer_Gettime();
    int timeSlice = currentTime - lastTime;  /* varies from frame to frame */
    UpdateWorld(timeSlice);                  /* simulate timeSlice ms of game time */
    RenderWorld();
    lastTime = currentTime;
}
```

With a fast renderer, the game world is updated with tiny time differences (timeslices) of 16-17 milliseconds (60 Hz), and everything is fine.
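The 16-17 ms figure is simply the frame period. As a worked example, using f for the frame rate and v for an object's speed (illustrative symbols, not from the original), each update covers a distance d:

$$
\Delta t = \frac{1000\ \text{ms}}{f}, \qquad d = v\,\Delta t
$$

$$
f = 60\ \text{Hz} \Rightarrow \Delta t \approx 16.7\ \text{ms}, \qquad f = 30\ \text{Hz} \Rightarrow \Delta t \approx 33.3\ \text{ms}
$$

Halving the framerate therefore doubles the timeslice, and with it the distance every object jumps between two updates.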

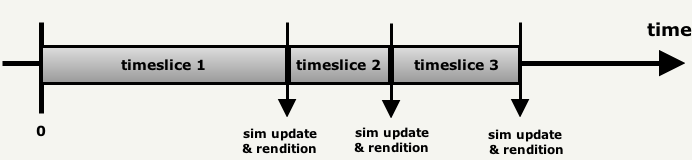

The key concept is that the timeslices at which the game is simulated vary in size:

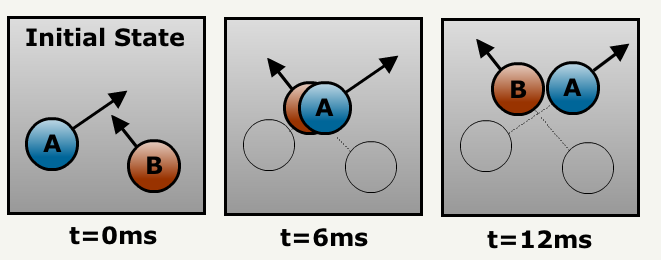

This architecture does work but is limited: The weakness in the design is that simulating the world accurately is tied to having small timeslices (a.k.a. a high framerate). Problems arise if the framerate drops too much: Updating at 30 Hz instead of 60 Hz may move objects so far in a single update that collisions are missed (in a game such as SHMUP, that was a big problem).
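To make the missed-collision problem concrete, here is a toy sketch with made-up numbers. The wall position, the bullet speed and the function name `BulletHitsWall` are all hypothetical: a bullet moves along one axis, and the game only checks for overlap after each update, as a naive per-frame test would.

```c
#include <stdbool.h>

/* Hypothetical setup: a bullet moves along x at 1 unit per millisecond
   toward a 20-unit-thick wall spanning x = 100..120. */
#define WALL_MIN 100
#define WALL_MAX 120
#define BULLET_SPEED 1 /* units per millisecond */

/* Moves the bullet from x = 0 with a constant timeslice (ms, must be > 0)
   until it is past the wall, testing for overlap only at the end of each
   update. Returns true if the bullet was ever seen inside the wall. */
bool BulletHitsWall(int timeSliceMs)
{
    int x = 0;
    while (x <= WALL_MAX) {
        x += BULLET_SPEED * timeSliceMs;      /* one update */
        if (x >= WALL_MIN && x <= WALL_MAX)   /* naive overlap test */
            return true;
    }
    return false;
}
```

With these numbers, `BulletHitsWall(16)` sees the bullet inside the wall at x = 112, while `BulletHitsWall(33)` never does: the bullet jumps from x = 99 straight to x = 132 between two checks.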

There are algorithms to mitigate this problem, but this naive design has many more flaws. The fundamental difficulty is not the length of a timeslice but its variability:

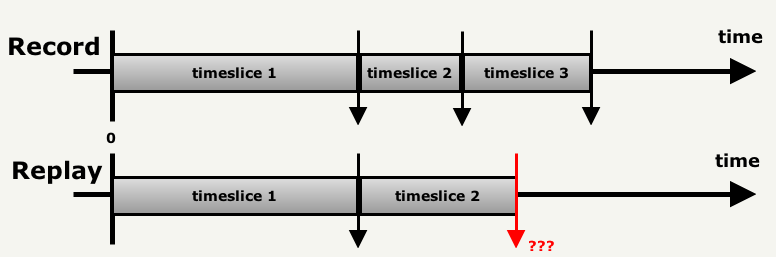

- Recording user inputs and replaying a game session (for debugging or entertainment) becomes difficult because the timeslices differ. This is true even if the replay runs on the machine that originally recorded the session, since operating system calls never take exactly the same time twice.

This issue can be solved by recording the simulation time along with the inputs, but the engine design starts to get messy. Besides, there is another issue:

- If your game is network-based and you need to run the same simulation simultaneously on several machines with identical inputs: The timeslices will not match, and small differences will accumulate until the simulations on the machines are out of sync!
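The divergence can be reproduced with a toy world. This is a minimal sketch under stated assumptions: the names are hypothetical, the world is a single object under constant acceleration updated with semi-implicit Euler integration, and integer units keep the results exact. Two "machines" simulate the same 64 ms of game time with the same inputs, but their frames happened to be sliced differently.

```c
/* Toy world (hypothetical): one object under constant acceleration. */
typedef struct {
    long long x; /* position */
    long long v; /* velocity */
} World;

#define ACCEL 1 /* velocity units gained per millisecond */

/* Naive variable-timestep update: the result depends on how time is sliced. */
void StepWorld(World *w, int dtMs)
{
    w->v += ACCEL * dtMs;   /* integrate velocity first... */
    w->x += w->v * dtMs;    /* ...then position (semi-implicit Euler) */
}

/* Runs the naive loop over the timeslices measured on one machine. */
World RunNaive(const int *slices, int count)
{
    World w = {0, 0};
    for (int i = 0; i < count; ++i)
        StepWorld(&w, slices[i]);
    return w;
}
```

Calling `RunNaive` with the slices {16, 16, 16, 16} and with {20, 12, 16, 16} gives the same velocity (64) but different positions (2560 versus 2576). In a networked game, that small gap would then feed into every later update.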

Solution: Fixed timeslices.

There is an elegant approach to fix everything at the cost of a small latency: Update the game timer at a fixed rate inside a second loop.

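```c
int gameOn = 1;
int simulationTime = Timer_Gettime();  /* start the game clock at "now" */
while (gameOn) {
    int realTime = Timer_Gettime();
    /* Catch up with real time using fixed steps only. */
    while (simulationTime < realTime) {
        simulationTime += 16;          /* Timeslice is ALWAYS 16 ms. */
        UpdateWorld(16);
    }
    RenderWorld();
}
```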
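The fixed-timeslice loop can be checked without a real clock by feeding it a list of timer readings, one per rendered frame. The sketch below is illustrative only: the names are hypothetical, the world is a single object under constant acceleration, and the step is 16 ms. It runs three "machines" with very different frame timings.

```c
#define TIMESLICE_MS 16
#define ACCEL 1 /* velocity units gained per millisecond (toy value) */

typedef struct {
    long long x, v;            /* toy object state */
    long long simulationTime;  /* ms of game time simulated so far */
    int ticks;                 /* number of fixed updates performed */
} FixedWorld;

/* One fixed update: always exactly TIMESLICE_MS of game time. */
static void StepFixed(FixedWorld *w)
{
    w->v += ACCEL * TIMESLICE_MS;
    w->x += w->v * TIMESLICE_MS;
    w->simulationTime += TIMESLICE_MS;
    w->ticks++;
}

/* Replays a sequence of timer readings (ms, one per frame). Each frame runs
   as many fixed steps as needed to catch up, then would render. */
FixedWorld RunFixed(const long long *frameTimes, int count)
{
    FixedWorld w = {0, 0, 0, 0};
    for (int i = 0; i < count; ++i) {
        while (w.simulationTime < frameTimes[i])
            StepFixed(&w);
        /* RenderWorld() would be called here. */
    }
    return w;
}
```

Frame readings of {16, 32, 48, 64}, {33, 64} and {5, 40, 64} ms all end with 4 ticks and x = 2560: only the number of rendered frames changes, not the simulation.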

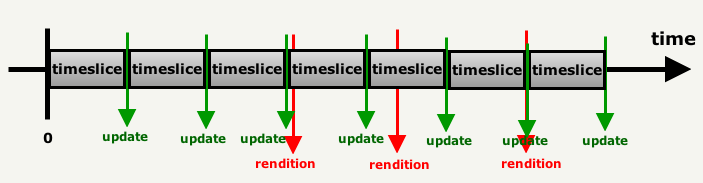

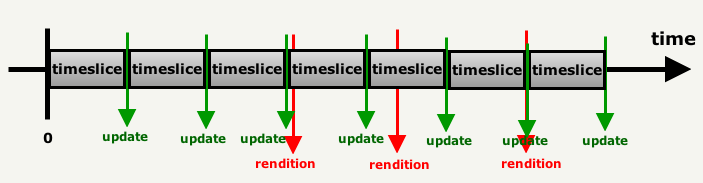

This tiny change decouples rendering from simulation, as follows:

The engine now simulates the world with CONSTANT timeslices, regardless of network latency or renderer performance.

It allows simple and elegant code for replay recording and playback and a solid collision detection system... but most importantly, it allows simulations running on different machines to stay synchronized as long as they are fed the same inputs and random seeds.
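With constant timeslices, a replay only needs the input of every tick plus the initial random seed; no timing data has to be stored. The following is a minimal sketch under those assumptions. The `Recording` layout, the one-axis input, the random "gust" and the LCG are all hypothetical choices made for illustration. The game owns its pseudo-random generator instead of calling `rand()`, whose sequence is implementation-defined and may differ between C libraries.

```c
#include <stdint.h>

#define MAX_TICKS 1024

/* Hypothetical per-tick input: -1, 0 or +1 on one axis. */
typedef struct {
    int8_t move[MAX_TICKS];
    int count;
} Recording;

typedef struct {
    int32_t x;
    uint32_t rng; /* game-owned PRNG state, seeded identically on every run */
} GameState;

/* Small deterministic LCG (Numerical Recipes constants). */
static uint32_t NextRandom(uint32_t *state)
{
    *state = *state * 1664525u + 1013904223u;
    return *state >> 16;
}

/* One fixed 16 ms tick: the outcome depends only on the state and input. */
static void Tick(GameState *s, int8_t move)
{
    s->x += move * 2;
    if (NextRandom(&s->rng) % 4 == 0)  /* hypothetical random gust */
        s->x += 1;
}

/* Live session: apply each tick's input and record it. */
GameState PlayAndRecord(uint32_t seed, const int8_t *inputs, int n, Recording *rec)
{
    GameState s = {0, seed};
    rec->count = 0;
    for (int i = 0; i < n && i < MAX_TICKS; ++i) {
        rec->move[rec->count++] = inputs[i];
        Tick(&s, inputs[i]);
    }
    return s;
}

/* Playback: same seed and same per-tick inputs give the same final state. */
GameState Replay(uint32_t seed, const Recording *rec)
{
    GameState s = {0, seed};
    for (int i = 0; i < rec->count; ++i)
        Tick(&s, rec->move[i]);
    return s;
}
```

Playing a few inputs with seed 42 and then calling `Replay` on the recording reproduces the exact same `x` and generator state. Two networked machines fed the same inputs and seed stay in lockstep for the same reason.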


Before you patent it...

The joy of "discovering" this approach was short-lived: This system is very well known in the game industry and is actually the keystone of games such as StarCraft II and of id Software's engines!

Further reading

- Timestepping by Peter Sundqvist

- Fix your timestep by Glenn Fiedler: Takes the idea further and also covers interpolation.

Merry Christmas....

...and Happy Hacking guys ;) !
